Optimizer still running

-

Hi @support (Stefano)

Thank you for your message. No, I was not able to resolve the issue. If you have found a solution, please post it here. If you want me to post my code again, please let me know.

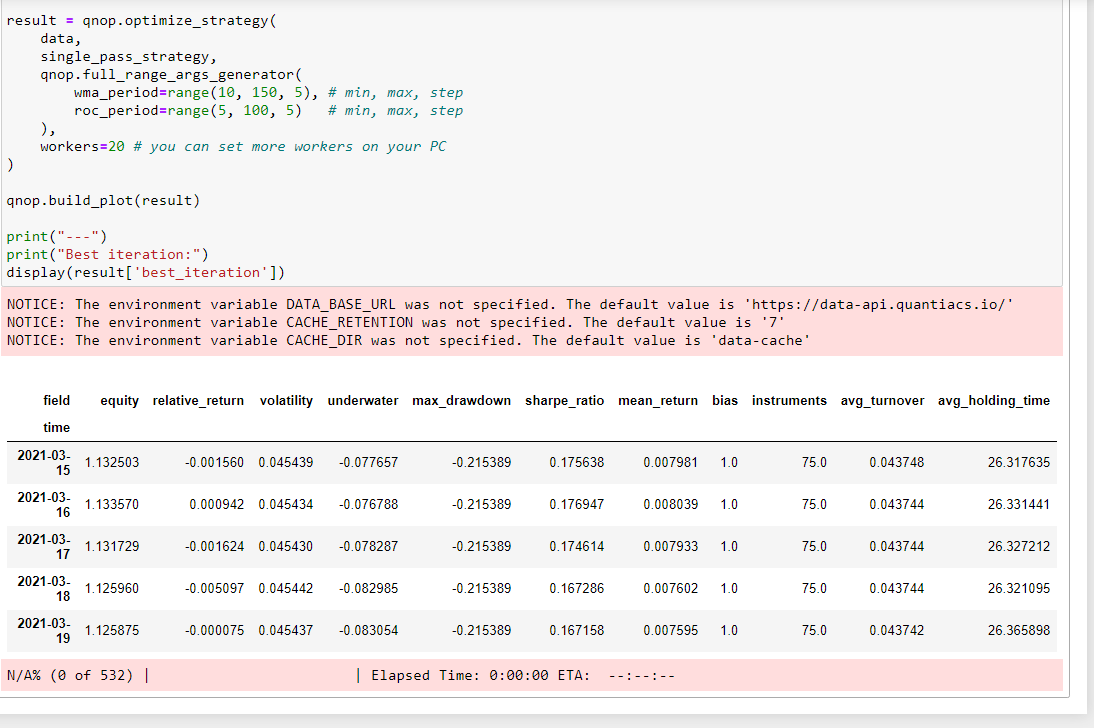

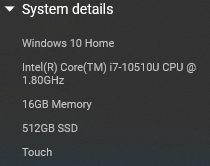

I decided to go back and try to run the original Quantiacs example itself, but alas, that too has been running for several hours (6+) now. See the screenshot. I tried using 20 workers; I don't know what's reasonable, but my PC is pretty powerful. It's still running.

This is the example from your documentation, unchanged. As you can see, the first part ran and printed its output fine.

-

Hello.

Could you provide more details, please?

What OS are you using?

What is your CPU?

How much RAM is on your PC?
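For reference, the OS and CPU details asked about here can be collected from inside the `qntdev` environment with the Python standard library alone. This is a minimal sketch: the helper name `environment_report` is hypothetical, and RAM is left out because the standard library has no portable way to query it. The `multiprocessing` start method is included because it shows whether workers are forked or spawned; Windows only supports `spawn`.

```python
import multiprocessing
import os
import platform
import sys


def environment_report() -> dict:
    """Collect basic OS/CPU/Python details (hypothetical helper, stdlib only)."""
    return {
        "os": platform.platform(),  # OS name, release and build as one string
        # processor name string; it can be empty, so fall back to the machine type
        "cpu": platform.processor() or platform.machine(),
        "logical_cpus": os.cpu_count(),  # None if it cannot be determined
        "python": sys.version.split()[0],
        # 'spawn' on Windows; other platforms may report 'fork' or 'forkserver'
        "mp_start_method": multiprocessing.get_start_method(),
    }


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```

Running it as a script in the activated environment prints one `key: value` line per item.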

Also, please run this command and send me the output:

`conda list -n qntdev`

If the progress stays at zero and does not change, it looks like a problem with `multiprocessing.Queue`...

Regards.

-

Hi @support

As requested:

`(qntdev) C:\Users\sheik>conda list -n qntdev`

Packages in environment at `C:\Users\sheik\anaconda3\envs\qntdev`:

| Name | Version | Build | Channel |
|---|---|---|---|
| argon2-cffi | 20.1.0 | py37h2bbff1b_1 | |
| async_generator | 1.10 | py37h28b3542_0 | |
| atomicwrites | 1.4.0 | py_0 | |
| attrs | 20.3.0 | pyhd3eb1b0_0 | |
| backcall | 0.2.0 | pyhd3eb1b0_0 | |
| blas | 1.0 | mkl | |
| bleach | 3.3.0 | pyhd3eb1b0_0 | |
| bottleneck | 1.3.2 | py37h2a96729_1 | |
| brotli-python | 1.0.9 | py37h82bb817_2 | |
| ca-certificates | 2021.1.19 | haa95532_1 | |
| certifi | 2020.12.5 | py37haa95532_0 | |
| cffi | 1.14.5 | py37hcd4344a_0 | |
| click | 7.1.2 | pyhd3eb1b0_0 | |
| colorama | 0.4.4 | pyhd3eb1b0_0 | |
| dash | 1.18.1 | pyhd8ed1ab_0 | conda-forge |
| dash-core-components | 1.14.1 | pyhd8ed1ab_0 | conda-forge |
| dash-html-components | 1.1.1 | pyh9f0ad1d_0 | conda-forge |
| dash-renderer | 1.8.3 | pyhd3eb1b0_0 | |
| dash-table | 4.11.1 | pyhd8ed1ab_0 | conda-forge |
| decorator | 4.4.2 | pyhd3eb1b0_0 | |
| defusedxml | 0.6.0 | pyhd3eb1b0_0 | |
| entrypoints | 0.3 | py37_0 | |
| flask | 1.1.2 | pyhd3eb1b0_0 | |
| flask-compress | 1.9.0 | pyhd3eb1b0_0 | |
| future | 0.18.2 | py37_1 | |
| hurst | 0.0.5 | pypi_0 | pypi |
| icc_rt | 2019.0.0 | h0cc432a_1 | |
| importlib-metadata | 2.0.0 | py_1 | |
| importlib_metadata | 2.0.0 | 1 | |
| iniconfig | 1.1.1 | pyhd3eb1b0_0 | |
| intel-openmp | 2020.2 | 254 | |
| ipykernel | 5.3.4 | py37h5ca1d4c_0 | |
| ipython | 7.20.0 | py37hd4e2768_1 | |
| ipython_genutils | 0.2.0 | pyhd3eb1b0_1 | |
| itsdangerous | 1.1.0 | py37_0 | |
| jedi | 0.17.0 | py37_0 | |
| jinja2 | 2.11.3 | pyhd3eb1b0_0 | |
| jsonschema | 3.2.0 | py_2 | |
| jupyter_client | 6.1.7 | py_0 | |
| jupyter_core | 4.7.1 | py37haa95532_0 | |
| jupyterlab_pygments | 0.1.2 | py_0 | |
| libsodium | 1.0.18 | h62dcd97_0 | |
| libta-lib | 0.4.0 | he774522_0 | conda-forge |
| llvmlite | 0.31.0 | py37ha925a31_0 | |
| m2w64-gcc-libgfortran | 5.3.0 | 6 | |
| m2w64-gcc-libs | 5.3.0 | 7 | |
| m2w64-gcc-libs-core | 5.3.0 | 7 | |
| m2w64-gmp | 6.1.0 | 2 | |
| m2w64-libwinpthread-git | 5.0.0.4634.697f757 | 2 | |
| markupsafe | 1.1.1 | py37hfa6e2cd_1 | |
| mistune | 0.8.4 | py37hfa6e2cd_1001 | |
| mkl | 2020.2 | 256 | |
| mkl-service | 2.3.0 | py37h196d8e1_0 | |
| mkl_fft | 1.3.0 | py37h46781fe_0 | |
| mkl_random | 1.1.1 | py37h47e9c7a_0 | |
| more-itertools | 8.7.0 | pyhd3eb1b0_0 | |
| msys2-conda-epoch | 20160418 | 1 | |
| nbclient | 0.5.3 | pyhd3eb1b0_0 | |
| nbconvert | 6.0.7 | py37_0 | |
| nbformat | 5.1.2 | pyhd3eb1b0_1 | |
| nest-asyncio | 1.5.1 | pyhd3eb1b0_0 | |
| notebook | 6.2.0 | py37haa95532_0 | |
| numba | 0.47.0 | py37hf9181ef_0 | |
| numpy | 1.19.2 | py37hadc3359_0 | |
| numpy-base | 1.19.2 | py37ha3acd2a_0 | |
| openssl | 1.1.1j | h2bbff1b_0 | |
| packaging | 20.9 | pyhd3eb1b0_0 | |
| pandas | 1.2.2 | py37hf11a4ad_0 | |
| pandoc | 2.11 | h9490d1a_0 | |
| pandocfilters | 1.4.3 | py37haa95532_1 | |
| parso | 0.8.1 | pyhd3eb1b0_0 | |
| pickleshare | 0.7.5 | pyhd3eb1b0_1003 | |
| pip | 21.0.1 | py37haa95532_0 | |
| plotly | 4.14.3 | pyhd3eb1b0_0 | |
| pluggy | 0.13.1 | py37_0 | |
| progressbar2 | 3.37.1 | py37haa95532_0 | |
| prometheus_client | 0.9.0 | pyhd3eb1b0_0 | |
| prompt-toolkit | 3.0.8 | py_0 | |
| py | 1.10.0 | pyhd3eb1b0_0 | |
| pycparser | 2.20 | py_2 | |
| pygments | 2.8.0 | pyhd3eb1b0_0 | |
| pyparsing | 2.4.7 | pyhd3eb1b0_0 | |
| pyrsistent | 0.17.3 | py37he774522_0 | |
| pytest | 6.2.2 | py37haa95532_2 | |
| pytest-runner | 5.3.0 | pyhd3eb1b0_0 | |
| python | 3.7.10 | h6244533_0 | |
| python-dateutil | 2.8.1 | pyhd3eb1b0_0 | |
| python-utils | 2.5.6 | py37haa95532_0 | |
| python_abi | 3.7 | 1_cp37m | conda-forge |
| pytz | 2021.1 | pyhd3eb1b0_0 | |
| pywin32 | 227 | py37he774522_1 | |
| pywinpty | 0.5.7 | py37_0 | |
| pyyaml | 5.4.1 | py37h2bbff1b_1 | |
| pyzmq | 20.0.0 | py37hd77b12b_1 | |
| qnt | 0.0.228 | py_0 | quantiacs-source |
| retrying | 1.3.3 | py37_2 | |
| scipy | 1.6.1 | py37h14eb087_0 | |
| send2trash | 1.5.0 | pyhd3eb1b0_1 | |
| setuptools | 52.0.0 | py37haa95532_0 | |
| six | 1.15.0 | py37haa95532_0 | |
| sqlite | 3.33.0 | h2a8f88b_0 | |
| ta-lib | 0.4.19 | py37h3a3b6f7_2 | conda-forge |
| tabulate | 0.8.9 | py37haa95532_0 | |
| tbb | 2020.3 | h74a9793_0 | |
| terminado | 0.9.2 | py37haa95532_0 | |
| testpath | 0.4.4 | pyhd3eb1b0_0 | |
| toml | 0.10.1 | py_0 | |
| tornado | 6.1 | py37h2bbff1b_0 | |
| traitlets | 5.0.5 | pyhd3eb1b0_0 | |
| vc | 14.2 | h21ff451_1 | |
| vs2015_runtime | 14.27.29016 | h5e58377_2 | |
| wcwidth | 0.2.5 | py_0 | |
| webencodings | 0.5.1 | py37_1 | |
| werkzeug | 1.0.1 | pyhd3eb1b0_0 | |
| wheel | 0.36.2 | pyhd3eb1b0_0 | |
| wincertstore | 0.2 | py37_0 | |
| winpty | 0.4.3 | 4 | |
| xarray | 0.16.2 | pyhd3eb1b0_0 | |
| yaml | 0.2.5 | he774522_0 | |
| zeromq | 4.3.3 | ha925a31_3 | |
| zipp | 3.4.0 | pyhd3eb1b0_0 | |
| zlib | 1.2.11 | h62dcd97_4 | |

-

@spancham Hi, this is most likely an issue on our side with Windows. We normally use Linux for development and testing, and in a Linux environment your code works fine. Please be patient: as soon as we reproduce the issue in a Windows environment, we will let you know and fix it.

-

@support

Cool, thanks!

-

Hello.

I noticed the error in the terminal:

    Traceback (most recent call last):
      File "<string>", line 1, in <module>
      File "C:\Users\User\.conda\envs\qntdev\lib\multiprocessing\spawn.py", line 105, in spawn_main
        exitcode = _main(fd)
      File "C:\Users\User\.conda\envs\qntdev\lib\multiprocessing\spawn.py", line 115, in _main
        self = reduction.pickle.load(from_parent)
    AttributeError: Can't get attribute 'single_pass_strategy' on <module '__main__' (built-in)>

It means that `multiprocessing` can't find this function in the Jupyter notebook. I dug into this and found the reason. Windows has no `fork` function, so `multiprocessing` starts each worker as a new Python process that runs the main `.py` file from the beginning to recreate the functions it needs. But an `.ipynb` notebook is not a plain `.py` file, and `multiprocessing` does not work correctly with it.

In practice, this means we have to move the functions that we plan to run in parallel out of the `.ipynb` into an external `.py` file. So I extracted the definition of `single_pass_strategy` into `strategy.py`:

    import qnt.ta as qnta
    import qnt.output as qnout
    import qnt.log as qnlog
    import xarray as xr

    def single_pass_strategy(data, wma_period=20, roc_period=10):
        wma = qnta.lwma(data.sel(field='close'), wma_period)
        sroc = qnta.roc(wma, roc_period)
        weights = xr.where(sroc > 0, 1, 0)
        weights = weights / len(data.asset)  # normalize weights so that sum=1, fully invested
        with qnlog.Settings(info=False, err=False):  # suppress log messages
            weights = qnout.clean(weights, data)  # check for problems
        return weights

And in the `.ipynb` I replaced the function definition with an import:

    from strategy import single_pass_strategy

It works.
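The failure can be reproduced without Jupyter or Windows. As the traceback shows (`reduction.pickle.load(from_parent)`), the spawned worker receives the target function through `pickle`, and `pickle` stores a function only by its module name and qualified name, not its code. The worker must therefore be able to import that module and find the name in it. The sketch below simulates both situations in a single process; the module names `fake_notebook_main` and `strategy_demo` and the toy function body are illustrative stand-ins, not part of the `qnt` library.

```python
import importlib
import pickle
import sys
import tempfile
import types
from pathlib import Path


def notebook_case() -> str:
    """Function defined in a module with no source file, like a notebook's __main__."""
    nb = types.ModuleType("fake_notebook_main")
    exec("def single_pass_strategy(x):\n    return x * 2\n", nb.__dict__)
    sys.modules[nb.__name__] = nb
    # The payload holds only the names 'fake_notebook_main' and 'single_pass_strategy'.
    payload = pickle.dumps(nb.single_pass_strategy)

    # A spawned worker starts with a fresh module that lacks the notebook's definitions.
    sys.modules[nb.__name__] = types.ModuleType(nb.__name__)
    try:
        pickle.loads(payload)
    except AttributeError as exc:
        return f"AttributeError: {exc}"
    finally:
        del sys.modules[nb.__name__]
    return "loaded"


def module_file_case() -> int:
    """Function defined in an importable .py file, like strategy.py."""
    with tempfile.TemporaryDirectory() as tmpdir:
        Path(tmpdir, "strategy_demo.py").write_text(
            "def single_pass_strategy(x):\n    return x * 2\n"
        )
        sys.path.insert(0, tmpdir)
        importlib.invalidate_caches()
        try:
            mod = importlib.import_module("strategy_demo")
            payload = pickle.dumps(mod.single_pass_strategy)
            # Forget the module, as a fresh worker would; unpickling re-imports it from disk.
            del sys.modules["strategy_demo"]
            restored = pickle.loads(payload)
            return restored(21)
        finally:
            sys.path.remove(tmpdir)
            sys.modules.pop("strategy_demo", None)


if __name__ == "__main__":
    print(notebook_case())
    print(module_file_case())
```

The first case fails with the same kind of `AttributeError` as in the traceback, while the second succeeds because the function can be re-imported by name, which is what moving `single_pass_strategy` into `strategy.py` achieves. When the driver is a plain `.py` script rather than a notebook, the Python documentation also recommends guarding the code that starts workers with `if __name__ == "__main__":` under the spawn start method.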

Thank you for your report. We will improve this example and add the necessary explanation.

Regards.

-

Maybe you need to install `ipywidgets`:

`conda install ipywidgets`

-

@support

Thank you! I'll try that and let you know.