@support please let me know. I do want to hear from excellent builders like you guys.

Posts made by multi_byte.wildebeest

-

RE: Why do we need to limit the time to process the strategy? (posted in Support)

-

Why do we need to limit the time to process the strategy? (posted in Support)

Hi, I wonder why we need to limit the processing time of our models (e.g., ML and DL models must not exceed 10 minutes for both training and evaluation, and models for crypto trading are not allowed to exceed 5 minutes...).

Can you explain why this limit is necessary when deploying these models in real-world trading scenarios?

Thanks,

-

RE: WARNING: some dates are missed in the portfolio_history (posted in Support)

@support Hi, but what about the dates that are missing from the portfolio history when running the precheck?

-

RE: WARNING: some dates are missed in the portfolio_history (posted in Support)

@support Thank you so much for your very clear explanation!

-

WARNING: some dates are missed in the portfolio_history (posted in Support)

Hi, I am starting with the example "Q18: Supervised learning (Ridge Classifier)".

I encountered the warning "some dates are missed in the portfolio_history" when running precheck.ipynb, whereas strategy.ipynb runs fine.

As a result, the Sharpe ratio in the precheck is 0.25, far below the Sharpe in strategy.ipynb.

I don't think there is any forward-looking bias, because the weights are produced by `qnbt.backtest_ml`.

- What are the possible reasons?

- Can you suggest a temporary workaround (e.g., assigning weights to the missing dates)?
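A temporary workaround along the lines of the second question could be to reindex the weights onto the full list of trading dates and carry the last known weights forward. This uses only past weights, so it does not add look-ahead, but it only hides the gaps and does not explain why the precheck produces them. The sketch below uses pandas and assumes the weights are a DataFrame indexed by date with one column per asset (a 2-D xarray `DataArray` can be converted with `to_pandas()`). The function name `fill_missing_dates`, the tickers, and the dates are hypothetical.

```python
import pandas as pd

def fill_missing_dates(weights: pd.DataFrame, all_dates: pd.DatetimeIndex) -> pd.DataFrame:
    """Reindex weights onto the full trading calendar (hypothetical helper).

    Dates missing from `weights` inherit the last known weights (forward fill);
    dates before the first prediction get zero weight (no position).
    """
    filled = weights.reindex(all_dates).ffill()
    return filled.fillna(0.0)

# Hypothetical example: 5 trading days, with predictions missing on two of them.
dates = pd.bdate_range("2024-01-01", periods=5)
weights = pd.DataFrame(
    {"AAPL": [0.5, 0.2, 0.1], "MSFT": [0.5, 0.8, 0.9]},
    index=dates[[1, 2, 4]],
)
full = fill_missing_dates(weights, dates)
print(full)
```

Here the first day gets zero weights and the fourth day repeats the third day's weights.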

-

RE: Differences between Sharpe in Precheck and Sharpe in strategy.ipynb (posted in Support)

@support Yes, I only use qnt.backtest_ml, which, as far as I know, helps avoid forward-looking bias.

You can check my model: the Sharpe in strategy.ipynb, the Sharpe in the precheck, and the IS Sharpe are all different, although there is no forward-looking.

ID: dlsdcexp_416765536

Thanks,

-

Differences between Sharpe in Precheck and Sharpe in strategy.ipynb (posted in Support)

Hi, I am designing a deep learning model, and I want to get an estimate of its Sharpe.

I get different results when running Precheck.ipynb and strategy.ipynb.

I have tried `get_sharpe(data, weights)` in strategy.ipynb as guided and got the same result as with backtest_ml.

So, which Sharpe will be closer to the IS score (which must be >= 1.0 for submission)?

Thank you.
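For reference, a common textbook definition of the annualized Sharpe ratio is the mean of daily returns divided by their standard deviation, multiplied by sqrt(252). The sketch below implements that definition with NumPy, assuming a zero risk-free rate. It is not the qnt library's implementation, whose exact conventions (for example, how returns are derived from the equity curve) may differ, so small discrepancies against `get_sharpe` are possible. `annualized_sharpe` is a hypothetical helper and the returns are made up.

```python
import numpy as np

def annualized_sharpe(daily_returns, periods_per_year: int = 252) -> float:
    """Annualized Sharpe ratio of daily returns (risk-free rate taken as 0)."""
    r = np.asarray(daily_returns, dtype=float)
    std = r.std(ddof=1)  # sample standard deviation
    if std == 0:
        return float("nan")  # undefined for a series with no variation
    return float(r.mean() / std * np.sqrt(periods_per_year))

returns = np.array([0.01, -0.005, 0.002, 0.007, -0.003])
print(round(annualized_sharpe(returns), 2))  # prints 5.47
```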

-

How can we estimate the Sharpe of a submission? (posted in Support)

Hi, how can I know what the IS Sharpe will be while developing my strategy?

For example, backtest_ml prints a Sharpe value at the end, but as far as I know, it is the Sharpe of the last trading day.

Many strategies developed locally have a Sharpe > 1.0 but are filtered out by the IS Sharpe when submitted.
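Whether the value printed by backtest_ml is an expanding-window statistic is an assumption here, not something confirmed in this thread. If it is, its value "on the last day" is the Sharpe of the entire backtest period up to that day, not of that single day, as the pandas sketch below illustrates with synthetic random returns. `expanding_sharpe` is a hypothetical helper.

```python
import numpy as np
import pandas as pd

def expanding_sharpe(daily_returns: pd.Series, periods_per_year: int = 252) -> pd.Series:
    """Sharpe ratio on each day, using all returns up to and including that day."""
    mean = daily_returns.expanding(min_periods=2).mean()
    std = daily_returns.expanding(min_periods=2).std()  # ddof=1 by default
    return mean / std * np.sqrt(periods_per_year)

# Synthetic daily returns, standing in for a real strategy's returns.
rng = np.random.default_rng(0)
returns = pd.Series(rng.normal(0.0005, 0.01, 500),
                    index=pd.bdate_range("2020-01-01", periods=500))
sharpe_path = expanding_sharpe(returns)
full_period = returns.mean() / returns.std() * np.sqrt(252)
# The last value of the path equals the full-period Sharpe (up to rounding).
print(sharpe_path.iloc[-1], full_period)
```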

-

Missed call to write_output although I had included it (posted in Support)

Hi, after developing my strategy and storing the result in `weights`, I included the following cell before submitting:

```python
import qnt.output as qnout

# `weights` and `data` come from the earlier cells of strategy.ipynb
weights = weights.sel(time=slice("2005-01-01", None))

qnout.check(weights, data, "stocks_nasdaq100")

qnout.write(weights)  # to participate in the competition
```

But my submission was still filtered by "missed call to write output".

Can you help me check my strategy? ID: ndl01 16718690

Thanks,

-

Non-deep-learning strategy filtered by "Calculation time exceeded" (posted in Support)

Hello, I've been submitting my model to the competition, but it gets filtered by "Calculation time exceeded". I do not use or train any deep learning or machine learning models.

Could you please look at my strategy and help? ID: al3 #16698570

Thanks,

-

Submitting a deep learning model: filtered by "Calculation time exceeded" (posted in Support)

My DL models are filtered by "Calculation time exceeded".

I have tested one on Google Colab, and running qnt.backtest (the last cell) takes about 2-3 minutes, not counting the pip installs of pandas and the qnt libraries.

The other cells take 0 s to run, whereas the time limit for futures is 10 minutes.

I submit as follows: I click Jupyter, replace "strategy.ipynb" with my own "strategy.ipynb" (deleting the first three cells that install pandas, the qnt libraries, etc., as suggested), and add a new cell to "init.ipynb": `pip install torch`.

Can you figure out the root cause and how to fix it? (You can use the LSTM example, which is analogous to my model.)
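Since the Colab measurement covered only the last cell and excluded the installs, it may help to time each stage separately (installing torch, loading data, training, backtesting) against the 10-minute budget. The context manager below is a hypothetical helper built only on the standard library's `time.perf_counter`; the stage names are illustrative.

```python
import time
from contextlib import contextmanager

@contextmanager
def time_budget(label: str, budget_seconds: float):
    """Time the enclosed block and report whether it stayed within budget_seconds."""
    result = {"label": label, "elapsed": None, "within_budget": None}
    start = time.perf_counter()
    try:
        yield result
    finally:
        # Runs even if the block raises, so partial timings are still reported.
        result["elapsed"] = time.perf_counter() - start
        result["within_budget"] = result["elapsed"] <= budget_seconds
        status = "OK" if result["within_budget"] else "OVER BUDGET"
        print(f"{label}: {result['elapsed']:.1f}s ({status}, budget {budget_seconds:.0f}s)")

# Illustrative usage: time.sleep stands in for a real stage such as training.
with time_budget("dummy stage", budget_seconds=600) as timing:
    time.sleep(0.1)
```

In a notebook, the training step could be wrapped as `with time_budget("train LSTM", 600): ...`. The submission environment's hardware may differ from Colab's, so local timings are only indicative.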

-

RE: Printing training performance of neural network models (posted in Support)

@vyacheslav_b Thank you for your support. Can you give me an estimate of how large our models should be, or how much time they should take? I run them over 20 years of data; some models take 4 or 10 minutes, others 30 s to 1 minute (all have Sharpe > 1.0). Could you share the highest Sharpe you have achieved with these deep learning models?

-

Printing training performance of neural network models (posted in Support)

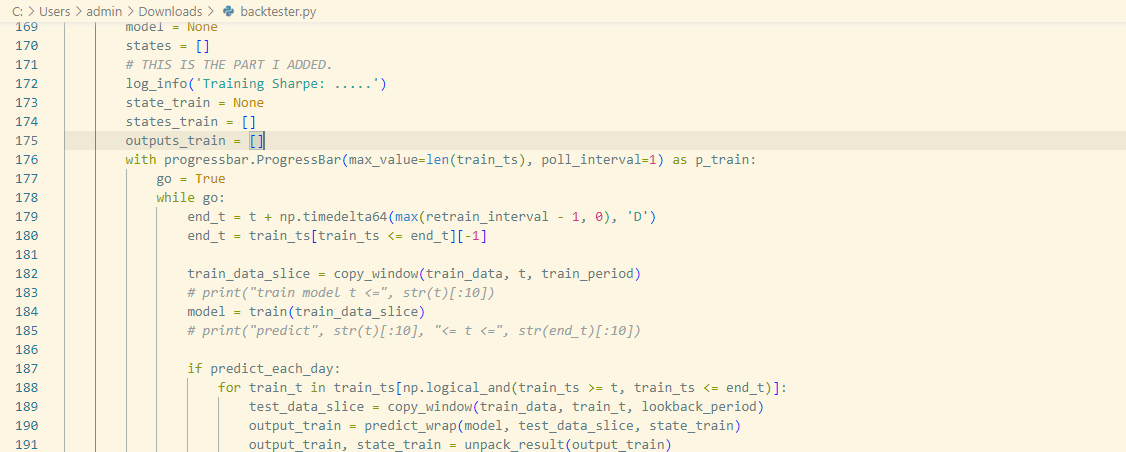

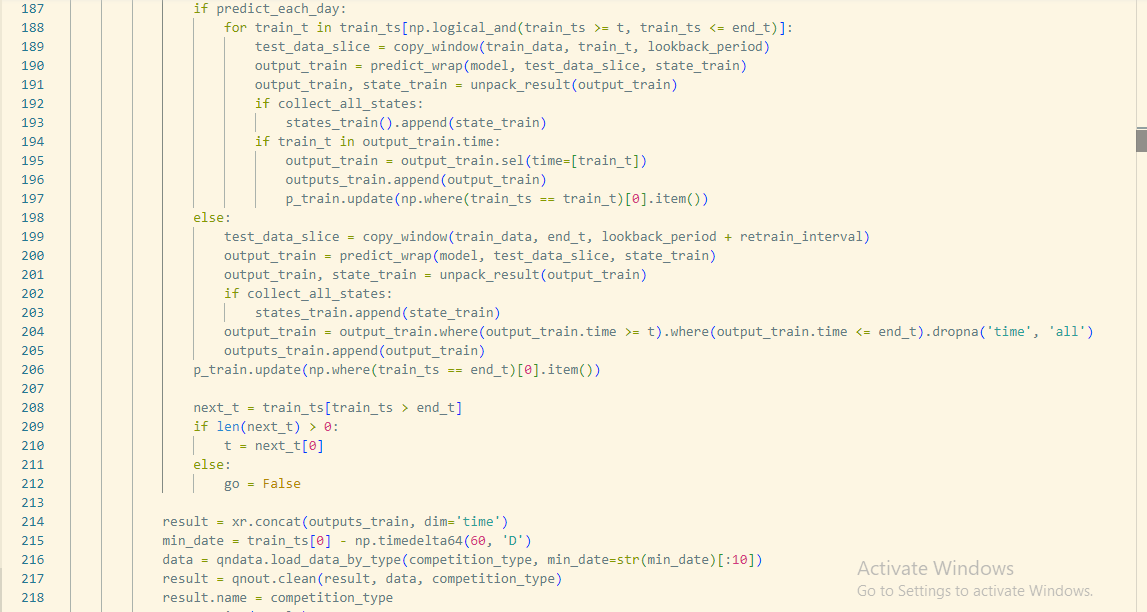

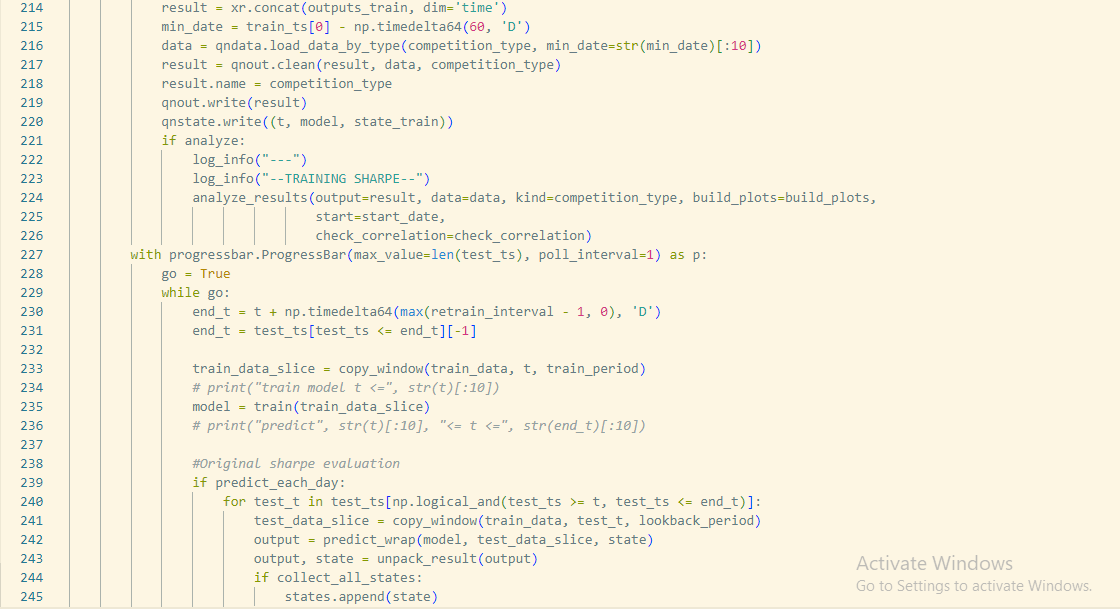

I am starting with the LSTM example model (in the Examples section). I want to print the model's Sharpe on the training set to compare it with its Sharpe on the test set (which is printed by default). To do that, I am editing the backtester.py file (specifically the backtest_ml function). In the edited backtester.py attached to this post, lines 172-226 are the ones I added to print the Sharpe on the training set.

But I got an unexpected result, which makes me think I did something wrong: the Sharpe on the training set is 0.89 and the Sharpe on the test set is -0.04, whereas with an unmodified backtester.py, the Sharpe printed by default is 0.89.
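One way to compare training-period and test-period Sharpe without modifying backtester.py is to compute both from a single daily return series of the strategy, split at the date where training ends. The sketch assumes such a pandas Series is available; `sharpe`, `train_test_sharpe`, the synthetic returns, and the split date are hypothetical. A Sharpe measured on the data the model was fitted on is in-sample and typically optimistic.

```python
import numpy as np
import pandas as pd

def sharpe(returns: pd.Series, periods_per_year: int = 252) -> float:
    """Annualized Sharpe ratio of daily returns (risk-free rate taken as 0)."""
    return float(returns.mean() / returns.std() * np.sqrt(periods_per_year))

def train_test_sharpe(returns: pd.Series, split_date: str) -> tuple[float, float]:
    """Sharpe before split_date (training period) and from split_date on (test period)."""
    split = pd.Timestamp(split_date)
    train = returns[returns.index < split]
    test = returns[returns.index >= split]
    return sharpe(train), sharpe(test)

# Synthetic daily returns, standing in for a real strategy's returns.
rng = np.random.default_rng(1)
returns = pd.Series(rng.normal(0.0003, 0.01, 750),
                    index=pd.bdate_range("2018-01-01", periods=750))
train_s, test_s = train_test_sharpe(returns, "2020-01-01")
print(f"train Sharpe: {train_s:.2f}, test Sharpe: {test_s:.2f}")
```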