Hi @support ,

sorry to bother you again but I have a new issue with my submissions.

On 2025-07-14 I submitted 3 strategies and they have been stuck at 99 % for 3 days now.

Yesterday I submitted another one and it's also stuck at 99 %

Could you please have a look?

One odd thing: in the meantime, the ones from 2025-07-14 have processed the trading day 2025-07-15. I hope they don't wait for new data every day.

Posts made by antinomy

-

RE: Strategy passes correlation check in backtester but fails correlation filter after submission (posted in Support)

-

RE: Strategy passes correlation check in backtester but fails correlation filter after submission (posted in Support)

@support All is looking good now, thanks!

-

RE: Strategy passes correlation check in backtester but fails correlation filter after submission (posted in Support)

Hi @support ,

unfortunately the strategy is still not eligible for the contest, now it says "Competitions for the specified type are not running" and it's not selected to run in the contest.

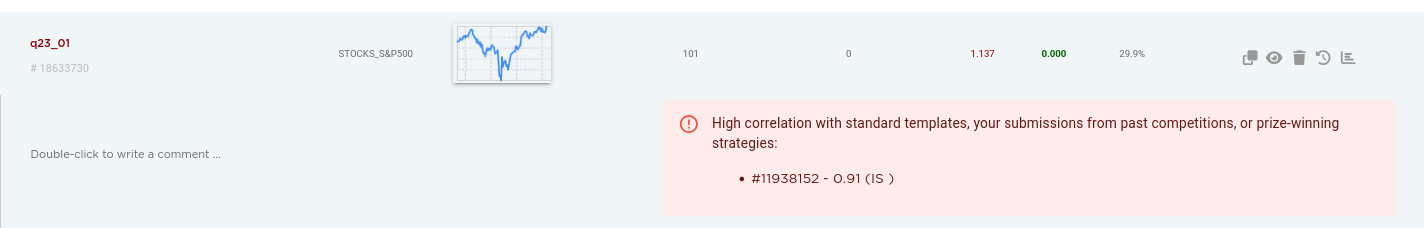

Just out of curiosity I cloned the strategy and submitted the clone; the result is the same as the original issue:

(The cloned strategy can be deleted; I will eventually do so before the deadline.)

Can you share any insights as to why this happens at all?

Thanks.

-

Strategy passes correlation check in backtester but fails correlation filter after submission (posted in Support)

Hi @support,

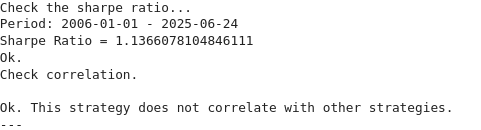

When I run my strategy with `qnt.backtester.backtest`, setting `check_correlation=True`, it passes the correlation test.

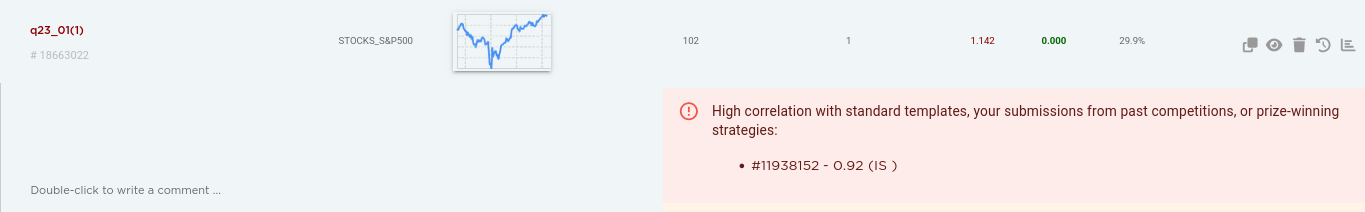

When I submitted it to the contest it got rejected and in the candidates tab it has a message about high correlation:

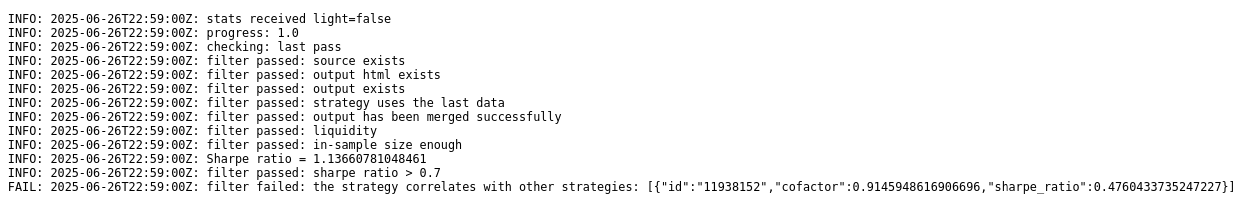

Looking at the short description in the strategy history it passes all tests on every trading day except for the last one (2025-06-24):

I don't quite understand why it passes the correlation check in the backtester but fails it after submission on the last day.

Which correlation test is wrong, the one from the backtester or the one running after submission?

If it helps, the strategy in question is a Q19 submission by another user, and when I look at it there are only 87 data points.

My strategy is 18633730 and the one it does or does not have a high correlation with is 11938152.

-

RE: ERROR! The max exposure is too high (posted in Support)

@vyacheslav_b Hi, I agree that diversification is always a good idea for trading. It might be helpful if there was an additional function like `check_diversification` with a parameter for the minimum number of assets you want to trade. But this function should only warn you and not fix an undiversified portfolio, because the only way would be to add more assets to trade, and asset selection should be done by the strategy itself in my opinion.

@support Hi, I just checked out `qnt 0.0.504` and the problem I mentioned seems to be fixed now, thanks!

Would you perhaps consider adding a leverage check to the `check` function?

Because one might think "qnout.check says everything is OK, so I have a valid portfolio" while actually being vastly overleveraged, like in my 2nd example where `weights.sum('asset').values.max()` is `505.0`.

Adding something like this to `check` would tell us about it:

    log_info("Check max portfolio leverage...")
    max_leverage = abs(output).sum(ds.ASSET).values.max()
    if max_leverage > 1 + 1e-13:  # (give some leeway for rounding errors and such)
        log_err("ERROR! The max portfolio leverage is too high.")
        log_err(f"Max leverage: {max_leverage} Limit: 1.0")
        log_err("Use qnt.output.clean() or normalize() to fix.")
    else:
        log_info("Ok.")

-

RE: ERROR! The max exposure is too high (posted in Support)

@support,

Either something about the exposure calculation is wrong or I really need clarification on the rules. About the position limit I only find rule 7. o. in the contest rules, which states "The evaluation system limits the maximum position size for a single financial instrument to 10%".

I always assumed this would mean the maximum weight for an asset would be 0.1, meaning 10 % of the portfolio. However, the exposure calculation suggests the following:

Either we trade no asset or at least 10 assets per trading day, regardless of the actual weights assigned to each asset.

Consider this example:

    import qnt.data as qndata
    import qnt.output as qnout
    from qnt.filter import filter_sharpe_ratio

    data = qndata.stocks.load_spx_data(min_date="2005-01-01")
    weights = data.sel(field='is_liquid').fillna(0)
    weights *= filter_sharpe_ratio(data, weights, 3) * .01  # assign 1 % to each asset using the top 3 assets by sharpe
    qnout.check(weights, data, "stocks_s&p500")

which results in an exposure error:

    Check max exposure for index stocks (nasdaq100, s&p500)…
    ERROR! The max exposure is too high.
    Max exposure: [0. 0. 0. ... 0.33333333 0.33333333 0.33333333] Hard limit: 0.1
    Use qnt.output.cut_big_positions() or normalize_by_max_exposure() to fix.

even though the maximum weight per asset is only 0.01:

    abs(weights).values.max()
    0.01

(By the way, the 4 functions mentioned by @Vyacheslav_B also result in weights which don't pass the exposure check when used with this example, except `drop_bad_days`, which results in empty weights.)

And if we assign 100 % to every liquid asset, the exposure check passes:

    weights = data.sel(field='is_liquid').fillna(0)
    qnout.check(weights, data, "stocks_s&p500")

    Check max exposure for index stocks (nasdaq100, s&p500)…
    Ok.

So, does rule 7. o. mean we have to trade at least 10 assets or none at all each trading day to satisfy the exposure check?
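The numbers in this post are consistent with the check measuring each position relative to the sum of absolute weights on that day, rather than looking at the raw weight. That is an inference from the output shown here, not something confirmed against the toolbox source. A minimal pure-Python sketch under that assumption (the function name `max_relative_exposure` is made up for illustration):

```python
def max_relative_exposure(day_weights):
    """Largest |weight| divided by the sum of |weights| for one trading day.

    Assumption: this mirrors how the exposure check normalizes positions.
    """
    total = sum(abs(w) for w in day_weights)
    if total == 0:
        return 0.0  # nothing traded on that day
    return max(abs(w) for w in day_weights) / total


# Three assets at 1 % each, everything else at zero (first example in the post).
three_small = [0.01, 0.01, 0.01] + [0.0] * 497
# Every asset at 100 % (second example in the post), assuming 500 liquid assets.
all_equal = [1.0] * 500

exposure_small = max_relative_exposure(three_small)
exposure_equal = max_relative_exposure(all_equal)
print(exposure_small, exposure_equal)
```

Under this reading, three equal positions always give a relative exposure of one third no matter how small the weights are, while 500 equal positions give 1/500, which would explain why the second portfolio passes a 0.1 limit.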

-

RE: Some top S&P 500 companies are not available? (posted in Support)

The symbols are all in there, but if they are listed on NYSE you have to prepend `NYS:` not `NAS:` to the symbol. Also, I believe by 'BKR.B' you mean 'BRK.B'.

    [sym for sym in data.asset.values if any(map(lambda x: x in sym, ['JPM', 'LLY', 'BRK.B']))]

    ['NYS:BRK.B', 'NYS:JPM', 'NYS:LLY']

You can also search for symbols in `qndata.stocks_load_spx_list()` and get a little more info like this:

    syms = qndata.stocks_load_spx_list()
    [sym for sym in syms if sym['symbol'] in ['JPM', 'LLY', 'BRK.B']]

    [{'name': 'Berkshire Hathaway Inc',
      'sector': 'Finance',
      'symbol': 'BRK.B',
      'exchange': 'NYS',
      'id': 'NYS:BRK.B',
      'cik': '1067983',
      'FIGI': 'tts-824192'},
     {'name': 'JP Morgan Chase and Co',
      'sector': 'Finance',
      'symbol': 'JPM',
      'exchange': 'NYS',
      'id': 'NYS:JPM',
      'cik': '19617',
      'FIGI': 'tts-825840'},
     {'name': 'Eli Lilly and Co',
      'sector': 'Healthcare',
      'symbol': 'LLY',
      'exchange': 'NYS',
      'id': 'NYS:LLY',
      'cik': '59478',
      'FIGI': 'tts-820450'}]

The value for the key 'id' is what you will find in `data.asset`.

-

RE: toolbox not working in colab (posted in Support)

I got the same error after installing `qnt` locally with pip.

There is indeed a circular import in the current Github repo for the toolbox, introduced by this commit:

https://github.com/quantiacs/toolbox/commit/78beafa93775f33606156169b3e6b8f995804151#diff-89350fe373763b439e4697f9b11cceb811b4a3f0adc7a655707a936ce5646c01R6-R10

when some of the imports in `output.py`, which were inside of functions before, were moved to the top level.

Now `output` imports from `stats` and `stats` imports from `output`.

@support Can you please have a look?
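The failure mode described above can be reproduced in isolation. The sketch below writes four throwaway modules into a temporary folder: one pair importing each other at the top level, and one pair where one of the imports is deferred into a function. The module and function names (`demo_output_bad`, `calc`, `clean`, ...) are made up for the demonstration and are not taken from the toolbox.

```python
import importlib
import os
import sys
import tempfile


def write_module(folder, name, source):
    """Write a small Python module into the given folder."""
    with open(os.path.join(folder, name + ".py"), "w") as fh:
        fh.write(source)


with tempfile.TemporaryDirectory() as tmp:
    # Broken layout: both modules import each other at the top level.
    write_module(tmp, "demo_output_bad",
                 "from demo_stats_bad import calc\n\n"
                 "def clean():\n    return 'clean'\n")
    write_module(tmp, "demo_stats_bad",
                 "from demo_output_bad import clean\n\n"
                 "def calc():\n    return clean()\n")
    # Working layout: one import is deferred until the function is called.
    write_module(tmp, "demo_output_ok",
                 "def clean():\n    return 'clean'\n\n"
                 "def check():\n"
                 "    from demo_stats_ok import calc  # deferred import\n"
                 "    return calc()\n")
    write_module(tmp, "demo_stats_ok",
                 "from demo_output_ok import clean\n\n"
                 "def calc():\n    return clean()\n")

    sys.path.insert(0, tmp)
    importlib.invalidate_caches()  # make sure the new files are seen
    try:
        try:
            importlib.import_module("demo_output_bad")
            top_level_ok = True
        except ImportError as err:
            top_level_ok = False
            print("top-level cycle failed:", err)

        deferred_result = importlib.import_module("demo_output_ok").check()
        print("deferred import works:", deferred_result)
    finally:
        sys.path.remove(tmp)
```

The first import fails because `demo_stats_bad` asks for `clean` while `demo_output_bad` is still only partially initialized. With the import moved into the function body, both modules are fully loaded by the time the name is needed.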

@alexeigor @omohyoid

The conda version of `qnt` doesn't seem to be affected, so if that's an option for you, install that one instead.

Otherwise we can use the git version previous to the commit above:

    pip uninstall qnt
    pip install git+https://github.com/quantiacs/toolbox.git@a1e6351446cd936532af185fb519ef92f5b1ac6d

-

RE: Error for importing quantiacs module (posted in Support)

`!pip install --force-reinstall python_utils` should fix the issue.

But I have no idea what would have caused it, the line in converters.py is totally messed up. The only thing that comes to my mind is a cat on the keyboard

-

RE: Why .interpolate_na dosen't work well ? (posted in Support)

@cyan-gloom

interpolate_na() only eliminates NaNs between 2 valid data points. Take a look at this example:

    import qnt.data as qndata
    import numpy as np

    stocks = qndata.stocks_load_ndx_data()
    sample = stocks[:, -5:, -6:]  # The latest 5 dates for the last 6 assets
    print(sample.sel(field='close').to_pandas())
    """
    asset       NYS:NCLH  NYS:ORCL  NYS:PRGO  NYS:QGEN  NYS:RHT  NYS:TEVA
    time
    2023-05-12     13.24     97.85     35.21     45.09      NaN      8.03
    2023-05-15     13.71     97.26     34.23     45.36      NaN      8.07
    2023-05-16     13.48     98.25     32.84     45.25      NaN      8.13
    2023-05-17     14.35     99.77     32.86     44.95      NaN      8.13
    2023-05-18     14.53    102.34     33.43     44.92      NaN      8.26
    """

    # Let's add some more NaN values:
    sample.values[3, (1,3), 0] = np.nan
    sample.values[3, 1:4, 1] = np.nan
    sample.values[3, :2, 2] = np.nan
    sample.values[3, 2:, 3] = np.nan
    sample.values[3, :-1, 5] = np.nan
    print(sample.sel(field='close').to_pandas())
    """
    asset       NYS:NCLH  NYS:ORCL  NYS:PRGO  NYS:QGEN  NYS:RHT  NYS:TEVA
    time
    2023-05-12     13.24     97.85       NaN     45.09      NaN       NaN
    2023-05-15       NaN       NaN       NaN     45.36      NaN       NaN
    2023-05-16     13.48       NaN     32.84       NaN      NaN       NaN
    2023-05-17       NaN       NaN     32.86       NaN      NaN       NaN
    2023-05-18     14.53    102.34     33.43       NaN      NaN      8.26
    """

    # Interpolate the NaN values:
    print(sample.interpolate_na('time').sel(field='close').to_pandas())
    """
    asset       NYS:NCLH    NYS:ORCL  NYS:PRGO  NYS:QGEN  NYS:RHT  NYS:TEVA
    time
    2023-05-12    13.240   97.850000       NaN     45.09      NaN       NaN
    2023-05-15    13.420  100.095000       NaN     45.36      NaN       NaN
    2023-05-16    13.480  100.843333     32.84       NaN      NaN       NaN
    2023-05-17    14.005  101.591667     32.86       NaN      NaN       NaN
    2023-05-18    14.530  102.340000     33.43       NaN      NaN      8.26
    """

As you can see, only the NaNs in the first 2 columns are being replaced. The others remain untouched and might be dropped when you use dropna().

Another thing you should keep in mind is that you might introduce lookahead bias with interpolation, e.g. in a single-run backtest. In my example for instance (pretend the NaNs I added were already in the data) you would know on 2023-05-15 that ORCL will rise when in reality you would first know that on 2023-05-18.
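To make both points concrete without loading any data, here is a pure-Python sketch of linear interpolation over the time axis that only fills gaps between two valid points, next to a forward fill. Forward fill is a different technique, shown here only as one option that uses past values exclusively. The helper names are made up; the ORCL, QGEN and TEVA values are copied from the tables above, with the dates expressed as day offsets from 2023-05-12.

```python
import math

NAN = float("nan")


def interpolate_interior(xs, ys):
    """Linearly fill NaNs lying between two valid points; leave the rest as NaN."""
    result = list(ys)
    valid = [i for i, y in enumerate(ys) if not math.isnan(y)]
    for left, right in zip(valid, valid[1:]):
        for i in range(left + 1, right):
            frac = (xs[i] - xs[left]) / (xs[right] - xs[left])
            result[i] = ys[left] + frac * (ys[right] - ys[left])
    return result


def forward_fill(ys):
    """Carry the last valid value forward; uses only past information."""
    result, last = [], NAN
    for y in ys:
        if not math.isnan(y):
            last = y
        result.append(last)
    return result


days = [0, 3, 4, 5, 6]  # 2023-05-12, -15, -16, -17, -18 as day offsets
orcl = [97.85, NAN, NAN, NAN, 102.34]   # gap between two valid points
qgen = [45.09, 45.36, NAN, NAN, NAN]    # trailing NaNs
teva = [NAN, NAN, NAN, NAN, 8.26]       # leading NaNs

orcl_interp = interpolate_interior(days, orcl)
qgen_interp = interpolate_interior(days, qgen)
teva_interp = interpolate_interior(days, teva)
orcl_ffill = forward_fill(orcl)

print(orcl_interp)  # interior gap filled using the later 102.34 value
print(orcl_ffill)   # stays at 97.85 until the new price is actually known
```

The interpolated ORCL value on the second day already depends on the price from the last day, which is exactly the lookahead problem. The forward-filled series does not have that dependency, at the cost of being stale during the gap.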

-

RE: How to fix this error (posted in Support)

Assuming whatever `train` is has a similar structure as the usual stock data, I get the same error as you with:

    import itertools
    import qnt.data as qndata

    stocks = qndata.stocks_load_ndx_data(tail=100)
    for comb in itertools.combinations(stocks.asset, 2):
        print(stocks.sel(asset=[comb]))

There are 2 things to consider:

- `comb` is a tuple and you can't use tuples as value for the `asset` argument. You are putting brackets around it, but that gives you a list with one element which is a tuple, hence the error about setting an array element as a sequence. Using `stocks.sel(asset=list(comb))` instead resolves this issue, but then you'll get an index error, which leads to the second point.

- Each element in `comb` is a DataArray and cannot be used as an index element to select from the data. You want the string values instead; for this you can iterate over `asset.values` for instance.
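The first point can be seen with plain strings and no market data at all. The asset labels below are made up for the illustration:

```python
import itertools

assets = ["NAS:AAA", "NAS:BBB", "NAS:CCC"]  # hypothetical labels

first = next(itertools.combinations(assets, 2))
wrapped = [first]       # a list holding ONE element, which is a tuple
flat = list(first)      # a list holding the TWO labels themselves

print(wrapped)
print(flat)

# Converting every combination to a flat list of labels:
pairs = [list(comb) for comb in itertools.combinations(assets, 2)]
print(pairs)
```

Only the flat form has the shape a label-based selection expects, one label per list entry.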

My example works when the loop looks like this:

    for comb in itertools.combinations(stocks.asset.values, 2):
        print(stocks.sel(asset=list(comb)))

-

RE: Python (posted in General Discussion)

I'm a huge fan of Sentdex, he really taught me a lot about Python in his tutorials.

Have a look at his website and his Youtube channel, for instance there's a tutorial for Python beginners.

-

RE: Local Development with Notifications (posted in Support)

It's safe to ignore these notices but if they bother you, you can set the variables together with your API key using the defaults and the messages go away:

    import os

    os.environ['API_KEY'] = 'YOUR-API-KEY'
    os.environ['DATA_BASE_URL'] = 'https://data-api.quantiacs.io/'
    os.environ['CACHE_RETENTION'] = '7'
    os.environ['CACHE_DIR'] = 'data-cache'

-

Fundamental Data (posted in Support)

Hello @support

Could you please add CIKs to the NASDAQ100 stock list?

In order to load fundamental data from secgov we need the CIKs for the stocks but they're currently not in the list we get from qnt.data.stocks_load_ndx_list().

Although it is still possible to get fundamentals using qnt.data.stocks_load_list(), it takes a bit of acrobatics, like this for instance:

    import pandas as pd
    import qnt.data as qndata

    stocks = qndata.stocks_load_ndx_data()
    df_ndx = pd.DataFrame(qndata.stocks_load_ndx_list()).set_index('symbol')
    df_all = pd.DataFrame(qndata.stocks_load_list()).set_index('symbol')
    idx = sorted(set(df_ndx.index) & set(df_all.index))
    df = df_ndx.loc[idx]
    df['cik'] = df_all.cik[idx]
    symbols = list(df.reset_index().T.to_dict().values())
    fundamentals = qndata.secgov_load_indicators(symbols, stocks.time)

It would be nice if we could get them with just 2 lines like so:

    stocks = qndata.stocks_load_ndx_data()
    fundamentals = qndata.secgov_load_indicators(qndata.stocks_load_ndx_list(), stocks.time)

Also, the workaround doesn't work locally because qndata.stocks_load_list() seems to return the same list as qndata.stocks_load_ndx_list().
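The merge step of the workaround can also be written without pandas. This is only a sketch under the assumption that both lists are lists of dicts keyed by `'symbol'`, with `'cik'` present in the full list only; the helper name `add_ciks` and the sample entries are made up and much shorter than real list entries.

```python
def add_ciks(index_list, full_list):
    """Return copies of index_list entries with 'cik' taken from full_list.

    Symbols without a CIK in full_list are dropped, like the set
    intersection in the pandas version.
    """
    cik_by_symbol = {s["symbol"]: s["cik"] for s in full_list if s.get("cik")}
    return [
        dict(entry, cik=cik_by_symbol[entry["symbol"]])
        for entry in index_list
        if entry["symbol"] in cik_by_symbol
    ]


# Hypothetical, shortened entries for illustration only.
ndx_list = [
    {"symbol": "AAA", "id": "NAS:AAA"},
    {"symbol": "BBB", "id": "NAS:BBB"},
    {"symbol": "CCC", "id": "NAS:CCC"},
]
full_list = [
    {"symbol": "AAA", "id": "NAS:AAA", "cik": "1111"},
    {"symbol": "BBB", "id": "NAS:BBB", "cik": "2222"},
]

symbols = add_ciks(ndx_list, full_list)
print(symbols)
```

The result has the same list-of-dicts shape as the input lists, so it could be passed on wherever the pandas version passes `symbols`.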

Thanks in advance!

-

RE: Local Development Error "No module named 'qnt'" (posted in Support)

@eddiee Try step 4 without quotes, this should start jupyter notebook. And if that's your real API-key we see in the image, delete your last post. It's a bad idea to post it in a public forum

-

RE: Q17 Neural Networks Algo Template; is there an error in train_model()? (posted in Strategy help)

Yes, I noticed that too. And after fixing it the backtest takes forever...

Another thing to consider is that it redefines the model with each training, but I believe you can retrain already trained NNs with new data so they learn based on what they previously learned.

-

RE: Weights different in testing and submission (posted in Support)

About the slicing error, I had that too a while ago. It took me some time to figure out that it wasn't enough to have the right pandas version in the environment. Because I had another python install with the same version in my PATH, the qntdev-python also looked there and always used the newer pandas. So I placed the -s flag everywhere the qntdev python is supposed to run (PyCharm, Jupyter, terminal) like this

    /path/to/quantiacs/python -s strategy.py

Of course one could simply remove the other python install from PATH but I needed it there.
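To check whether an interpreter is picking up packages from outside its own environment, a small diagnostic like the following can help. It only uses the standard `sys` and `site` modules; whether the user site directory was the actual culprit in a given setup is an assumption to verify, not a given.

```python
import site
import sys

user_site = site.getusersitepackages()

report = {
    "executable": sys.executable,
    # True when the interpreter was started with -s
    "no_user_site_flag": bool(sys.flags.no_user_site),
    "user_site": user_site,
    # True when the per-user site-packages folder is searched for imports
    "user_site_on_path": user_site in sys.path,
}

for key, value in report.items():
    print(f"{key}: {value}")
```

Running this once with and once without `-s` in the same environment shows whether the flag changes the import path. Printing `pandas.__file__` after importing pandas is another quick way to see which install is actually used.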

-

RE: Saving and recalling a dictionary of trained modelsposted in Support

@alfredaita

In case you don't want to run init.py every time in order to install external libraries, I came up with a solution for this. You basically install the library in a folder in your home directory and let the strategy create symlinks to the module path at runtime. More details in this post.

-

Erroneous Data? (posted in Support)

Hello @support

Since the live period for the Q16 contest is coming to an end I'm watching my participating algorithms more closely and noticed something odd:

The closing prices are the same on 2022-02-24 and 2022-02-25 to the last decimal for almost all cryptos (49 out of 54).

    import qnt.data as qndata

    crypto = qndata.cryptodaily.load_data(tail=10)
    c = crypto.sel(field='close').to_pandas().iloc[-3:]
    liquid = crypto.sel(field='is_liquid').fillna(0).values.astype(bool)[-3:]
    # only showing the cryptos which were liquid for the last 3 days:
    c.iloc[:, liquid.all(axis=0)]

    asset          ADA   AVAX    BNB        BTC    DOGE    DOT        ETH   LINK    SOL       XRP
    time
    2022-02-23  0.8664  73.47  365.6  37264.053  0.1274  15.97  2580.9977  13.34  84.64  0.696515
    2022-02-24  0.8533  76.39  361.2  38348.744  0.1242  16.16  2598.0195  13.27  89.41  0.696359
    2022-02-25  0.8533  76.39  361.2  38348.744  0.1242  16.16  2598.0195  13.27  89.41  0.696359

    (c.values[-1] == c.values[-2]).sum(), c.shape[1]

    (49, 54)

Could you please have a look?
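The same check can be run over every pair of consecutive days to spot repeated rows. A pure-Python sketch, using the first four columns of the table above as sample data; the function name and the 90 % threshold are arbitrary choices for the illustration:

```python
def stale_days(closes_by_day, min_share=0.9):
    """Return the dates whose closes mostly repeat the previous day's closes.

    closes_by_day maps a date string to a list of closing prices,
    in the same asset order for every day.
    """
    flagged = []
    dates = sorted(closes_by_day)
    for prev, cur in zip(dates, dates[1:]):
        pairs = list(zip(closes_by_day[prev], closes_by_day[cur]))
        unchanged = sum(1 for a, b in pairs if a == b)
        if pairs and unchanged / len(pairs) >= min_share:
            flagged.append(cur)
    return flagged


# ADA, AVAX, BNB and BTC closes copied from the table above.
closes = {
    "2022-02-23": [0.8664, 73.47, 365.6, 37264.053],
    "2022-02-24": [0.8533, 76.39, 361.2, 38348.744],
    "2022-02-25": [0.8533, 76.39, 361.2, 38348.744],
}

suspicious = stale_days(closes)
print(suspicious)
```

Exact equality is intended here: independent prices that match to the last decimal across many assets are what makes the day suspicious.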

Thanks!

-

RE: External Libraries (posted in Support)

@support

Yes, pip is way faster. Thanks!

I might have found an even faster solution but I guess I have to wait a few hours to find out if it really works.

Here's what I did:

- I created a folder in /root/book called "modules" to install cvxpy there to make it persistent:

      !mkdir modules && pip install --target=modules cvxpy

- Then if the import fails in the strategy, it creates symbolic links in /usr/local/lib/python3.7/site-packages/ that point to the content of /root/book/modules/

      try:
          import cvxpy as cp
      except ImportError:
          import os
          source = '/root/book/modules/'
          target = '/usr/local/lib/python3.7/site-packages/'
          for dirpath, dirnames, filenames in os.walk(source):
              source_path = dirpath.replace(source, '')
              target_path = os.path.join(target, source_path)
              if not os.path.exists(target_path) and not os.path.islink(target_path):
                  os.symlink(dirpath, target_path)
                  continue
              for file in filenames:
                  source_file = os.path.join(dirpath, file)
                  target_file = os.path.join(target, source_path, file)
                  if not os.path.exists(target_file) and not os.path.islink(target_file):
                      os.symlink(source_file, target_file)
          import cvxpy as cp

Creating the symlinks only takes 0.07 seconds, so fingers crossed
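The linking step can be tried safely in throwaway directories before pointing it at a real site-packages folder. The sketch below is a variation on the code above, not the same code: it uses `os.path.relpath` and `os.path.lexists`, and stops descending into a directory once the whole directory has been linked. The function name `link_tree` and the file names are made up, and it assumes a platform where creating symlinks is permitted.

```python
import os
import tempfile


def link_tree(source, target):
    """Symlink everything under source into target, skipping existing names."""
    for dirpath, dirnames, filenames in os.walk(source):
        rel = os.path.relpath(dirpath, source)
        target_dir = target if rel == "." else os.path.join(target, rel)
        if not os.path.lexists(target_dir):
            os.symlink(dirpath, target_dir)
            dirnames[:] = []  # whole directory is linked, no need to descend
            continue
        for name in filenames:
            target_file = os.path.join(target_dir, name)
            if not os.path.lexists(target_file):
                os.symlink(os.path.join(dirpath, name), target_file)


with tempfile.TemporaryDirectory() as tmp:
    source = os.path.join(tmp, "modules")
    target = os.path.join(tmp, "site-packages")
    os.makedirs(os.path.join(source, "demo_pkg"))
    os.makedirs(target)
    with open(os.path.join(source, "demo_pkg", "__init__.py"), "w") as fh:
        fh.write("VALUE = 42\n")
    with open(os.path.join(source, "single.py"), "w") as fh:
        fh.write("NAME = 'single'\n")

    link_tree(source, target)
    link_tree(source, target)  # a second run must not fail or duplicate

    linked = sorted(os.listdir(target))
    package_is_link = os.path.islink(os.path.join(target, "demo_pkg"))
    init_visible = os.path.exists(os.path.join(target, "demo_pkg", "__init__.py"))

print(linked, package_is_link, init_visible)
```

Running the function twice is part of the demo because the strategy may execute the linking code again in an environment where the links already exist.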

UPDATE (a few hours later):

It actually worked. When I just reopened the strategy, the environment was newly initialized. First I tried just importing cvxpy and got the ModuleNotFoundError. Then I ran the strategy including the code above: cvxpy was imported correctly and the strategy ran.

I'm not sure if that solution works for every module because I don't know if pip might also write something to other directories than site-packages.

Anyway, I'm happy with this solution.

Regards